New AI technology will be used to screen images of children in ‘unsafe’ situations. Photo: File.

Did you know your childhood photos could help artificial intelligence combat child exploitation online?

We’re being asked to send pictures to a world-first ethically sourced and managed image bank to train AI to recognise images of children in ‘unsafe’ situations on the web and potentially flag abuse material.

The My Pictures Matter project is an initiative of the AiLECS Lab (AI for Law Enforcement and Community Safety), a collaboration between the Australian Federal Police (AFP) and Monash University focused on developing technologies that help our law enforcement agencies keep the community safe.

AiLECS Lab co-director and AFP leading senior constable Dr Janis Dalins said the ultimate goal of creating AI technologies to detect and triage child sexual abuse material was to quickly identify victims and material previously unseen by law enforcement.

“This will enable police to intervene faster to remove children from harm, stop perpetrators and better protect the community,” Dr Dalins said.

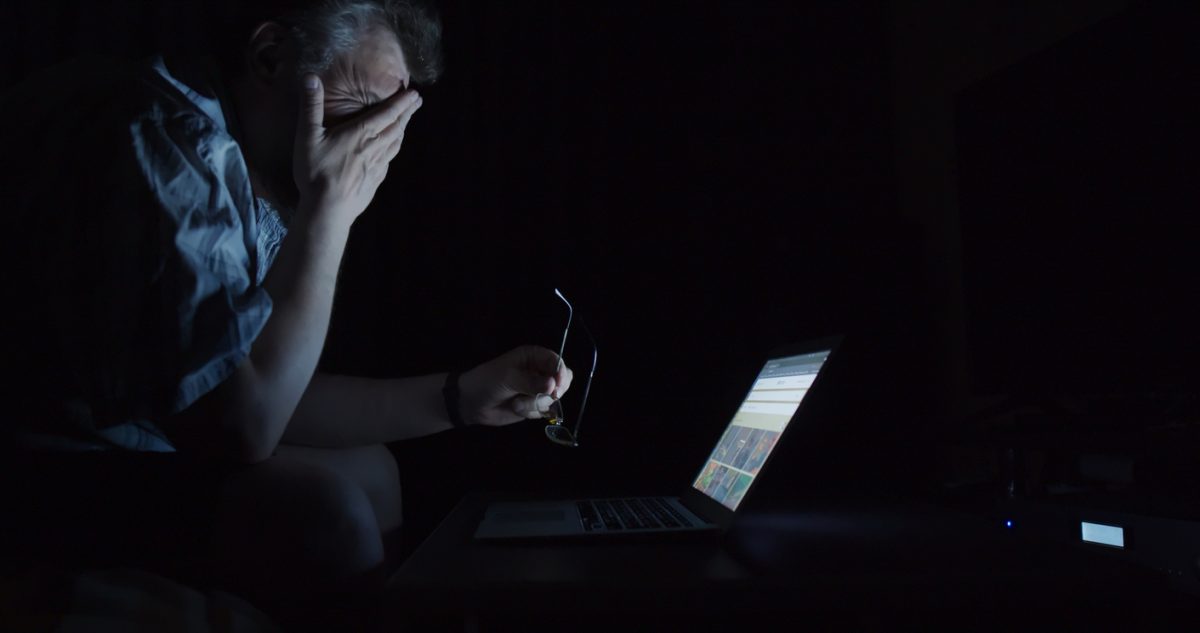

The technology will also assist officers who have to sift through large amounts of “horrific” child abuse material by minimising their repeated exposure to such images.

“In 2021, the AFP-led Australian Centre to Counter Child Exploitation (ACCCE) received more than 33,000 reports of online child exploitation and each report can contain large volumes of images and videos of children being sexually assaulted or exploited for the gratification of offenders,” Dr Dalins said.

“Reviewing this horrific material can be a slow process and the constant exposure can cause significant psychological distress to investigators. AiLECS Lab’s initiatives will support police officers and the children we are trying to protect, and researchers have thought of an innovative way to ethically develop the technology behind such initiatives.”

AiLECS Lab co-director Associate Professor Campbell Wilson said machine learning models were often trained using images of people taken from the internet or without documented consent for their use.

“To develop AI that can identify exploitative images, we need a very large number of children’s photographs in everyday ‘safe’ contexts that can train and evaluate the AI models that are intended to combat exploitation,” he explained.

“But sourcing these images from the internet is problematic when there is no way of knowing if the children in those pictures have actually consented for their photographs to be uploaded or used for research. By obtaining photographs from adults through informed consent, we are trying to build technologies that are ethically accountable and transparent.”

Researchers ask only people aged 18 and above to contribute photos to the campaign.

Project lead and data ethics expert Dr Nina Lewis said no personal information would be collected from contributors other than their email addresses, which would be stored separately from the supplied images.

“The images and related data will not include any identifying information, ensuring that images used by researchers cannot reveal any personal information about the people who are depicted,” she said.

Those who contribute their pictures can choose to receive details and updates about each stage of the research. They can also change their use permissions, or revoke their research consent and withdraw their images from the database, at a later date.

“While we are creating AI for social good, it is also very important to us that the processes and methods we are using are not sitting behind an impermeable wall,” Dr Lewis said.

“We want people to be able to make informed choices about how their data is used and to have visibility over how we use their data and what we are using it for.”

The ‘My Pictures Matter’ campaign will also help develop technologies to better protect survivors of abuse from ongoing harm and identify and prosecute perpetrators.

Researchers aim to have at least 100,000 ethically sourced images in the database for the AI training algorithm by the end of 2022.

The ACCCE has identified more than 800,000 registered accounts using anonymised platforms (such as the dark web and encrypted apps) solely to facilitate child abuse material since the beginning of the COVID-19 pandemic.

Nationally, the AFP arrested 233 people and laid 2032 alleged child abuse-related charges in 2021. Investigations resulted in 114 children being removed from harm, 65 internationally and 49 domestically.

In 2020, a total of 214 people were arrested and 2217 alleged child abuse-related charges were laid. Investigations resulted in 221 children being removed from harm, 130 internationally and 91 domestically.

An AFP spokesperson said online safety for children and young people was an increasing concern around the world.

“The increase in young people, including children and infants, accessing the internet has seen a corresponding upward trend in cases of online child sexual exploitation,” they said.